The 1st International Workshop on Language Models and Programming Languages

Generative artificial intelligence, exemplified by large language models (LLMs), is reshaping various aspects of programming languages, significantly simplifying many stages of software development. In recent years, many traditional areas of the programming languages (PL) community, such as program analysis and verification, program synthesis, and compiler optimization, have been profoundly influenced by LLMs, creating new opportunities for advancement. Despite their rapid progress, the inherent limitations of LLMs, particularly context length constraints, hallucinations, and high inference costs, restrict their effective application to PL tasks and other real-world problems.

As two distinct computational paradigms, neural networks represented by LLMs and traditional computation models based on programming languages have unique strengths and characteristics. To encourage researchers from the PL and ML communities to collaboratively address these challenges, we will host the International Workshop on Language Models and Programming Languages (LMPL) at SPLASH 2025, alongside other co-hosted PL conferences and workshops. We envision this new workshop as a platform for researchers from diverse backgrounds to engage in insightful exchanges, fostering synergy between traditional PL techniques and cutting-edge generative AI research, and driving innovation in these fields.

The workshop aims to achieve the following goals:

- Facilitate discussions among researchers on the primary challenges of LLM-driven solutions for PL problems and other LLM-driven applications.

- Provide a platform for researchers to exchange novel ideas and preliminary findings at the intersection of programming language techniques and language models.

- Establish a forum for academia and industry professionals to bridge the gap between academic research and industry requirements, encouraging the practical application of programming language methodologies and language models to tackle real-world challenges.

Conference website: https://conf.researchr.org/home/icfp-splash-2025/lmpl-2025

Submission website: https://lmpl25.hotcrp.com

Keynote Speaker

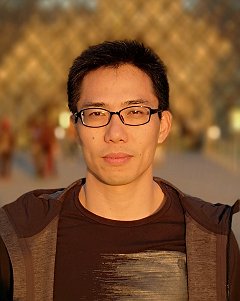

Jun Sun

Title: AI Safety through Programming?

Abstract: The rise of large AI models has amplified concerns about safety, reliability, and accountability in critical domains. Traditional programming languages research has long confronted similar challenges: how to specify intended behavior, prevent errors, and enforce guarantees through design. Yet modern AI systems often appear unprogrammable—opaque, stochastic, and lacking explicit semantics. In this talk, I will ask whether programming can still serve as a foundation for AI safety. I will discuss how concepts such as specification, semantics, and verification might be reimagined for AI-based systems, and share insights from our recent research that explores programming-inspired approaches to making AI systems more predictable and trustworthy.

Bio: Jun Sun is a Professor at Singapore Management University. He earned his Bachelor’s and Ph.D. degrees in Computer Science from the National University of Singapore in 2002 and 2006, respectively, and has been a faculty member since 2010. Professor Sun’s research interests span formal methods, AI safety, and software engineering. He is passionate about designing algorithms to solve challenging real-world problems and is equally devoted to enjoying life. He has published extensively in top venues, with several ACM Distinguished Paper Awards. For more information, please visit his website: https://sunjun.site.

Wed 15 Oct (displayed time zone: Perth)

10:10 - 10:50

10:10 | 40m | Coffee break | Break | Catering

10:50 - 12:05

10:50 | 75m | Keynote | AI Safety through Programming? | LMPL | Jun Sun (Singapore Management University)

12:10 - 13:40

12:10 | 90m | Lunch | Lunch | Catering

13:40 - 15:20 | LLMs for Program Analysis and Verification I | LMPL at Orchid East | Chair(s): Puzhuo Liu (Ant Group & Tsinghua University)

13:40 | 15m | Talk | Function Renaming in Reverse Engineering of Embedded Device Firmware with ChatGPT | LMPL | Puzhuo Liu (Ant Group & Tsinghua University), Peng Di (Ant Group & UNSW Sydney), Yu Jiang (Tsinghua University)

13:55 | 15m | Talk | Enhancing Semantic Understanding in Pointer Analysis Using Large Language Models | LMPL | Baijun Cheng (Peking University), Kailong Wang (Huazhong University of Science and Technology), Ling Shi (Nanyang Technological University), Haoyu Wang (Huazhong University of Science and Technology), Yao Guo (Peking University), Ding Li (Peking University), Xiangqun Chen (Peking University)

14:10 | 15m | Talk | Improving SAST Detection Capability with LLMs and Enhanced DFA (recorded) | LMPL | Yuan Luo (Tencent Security Yunding Lab), Zhaojun Chen (Tencent Security Yunding Lab), Yuxin Dong (Peking University), Haiquan Zhang (Tencent Security Yunding Lab), Yi Sun (Tencent Security Yunding Lab), Fei Xie (Tencent Security Yunding Lab), Zhiqiang Dong (Tencent Security Yunding Lab)

14:25 | 15m | Talk | ClearAgent: Agentic Binary Analysis for Effective Vulnerability Detection | LMPL | Xiang Chen (The Hong Kong University of Science and Technology), Anshunkang Zhou (The Hong Kong University of Science and Technology), Chengfeng Ye (The Hong Kong University of Science and Technology), Charles Zhang (The Hong Kong University of Science and Technology)

14:40 | 15m | Talk | CG-Bench: Can Language Models Assist Call Graph Construction in the Real World? (recorded) | LMPL | Ting Yuan, Wenrui Zhang (Huawei Technologies Co., Ltd), Dong Chen (Huawei Technologies Co., Ltd), Jie Wang (Huawei Technologies Co., Ltd)

14:55 | 20m | Talk | Beyond Static Pattern Matching? Rethinking Automatic Cryptographic API Misuse Detection in the Era of LLMs | LMPL

15:20 - 16:00

15:20 | 40m | Coffee break | Break | Catering

16:00 - 17:40 | LLMs for Program Analysis and Verification II | LMPL at Orchid East | Chair(s): Zhuo Zhang (Columbia University)

16:00 | 15m | Talk | Hallucination-Resilient LLM-Driven Sound and Tunable Static Analysis | LMPL

16:15 | 20m | Talk | Toward Repository-Level Program Verification with Large Language Models | LMPL

16:35 | 15m | Talk | Preguss: It Analyzes, It Specifies, It Verifies | LMPL | Zhongyi Wang (Zhejiang University, China), Tengjie Lin (Zhejiang University), Mingshuai Chen (Zhejiang University), Mingqi Yang (Zhejiang University), Haokun Li (Peking University), Xiao Yi (The Chinese University of Hong Kong), Shengchao Qin (Xidian University), Jianwei Yin (Zhejiang University)

16:50 | 20m | Talk | A Case Study on the Effectiveness of LLMs in Verification with Proof Assistants | LMPL | Barış Bayazıt (University of Toronto), Yao Li (Portland State University), Xujie Si (University of Toronto)

17:10 | 20m | Talk | Understanding Formal Reasoning Failures in LLMs as Abstract Interpreters (remote) | LMPL | Jacqueline Mitchell (University of Southern California), Brian Hyeongseok Kim (University of Southern California), Chenyu Zhou (University of Southern California), Chao Wang (University of Southern California)

16:00 - 17:40

16:00 | 15m | Talk | Vibe Coding Needs Vibe Reasoning – Improving Vibe Coding with Formal Verification (remote) | LMPL

16:15 | 15m | Talk | Current Practices for Building LLM-Powered Reasoning Tools Are Ad Hoc—and We Can Do Better | LMPL | Aaron Bembenek (The University of Melbourne)

16:30 | 15m | Talk | Composable Effect Handling for Programming LLM-integrated Scripts | LMPL | Di Wang (Peking University)

16:45 | 15m | Talk | The LLM Era Demands Natural-Language-Aligned Theorem Provers for Mathematics | LMPL | Qinxiang Cao (Shanghai Jiao Tong University), Lihan Xie (Shanghai Jiao Tong University), Junchi Yan (Shanghai Jiao Tong University)

17:00 | 15m | Talk | Programming Large Language Models with Algebraic Effect Handlers and the Selection Monad | LMPL | Shangyin Tan (University of California, Berkeley), Guannan Wei (Tufts University), Koushik Sen (University of California at Berkeley), Matei Zaharia (UC Berkeley)

Call for Papers

We invite submissions discussing recent advancements at the intersection of language models and programming languages. This workshop will offer researchers the opportunity to exchange ideas and explore emerging research directions. LMPL focuses on programming language-related problems, including program analysis, verification, and optimization. It also explores how PL techniques, such as formal methods and PL design principles, contribute to language model applications. The scope of LMPL includes, but is not limited to:

LLMs for PL tasks

- LLMs for static analysis, such as program verification, bug detection, and program optimization

- LLMs for code generation, such as program transpilation, synthesis, and repair

- LLMs for program testing, such as fuzzing and domain-specific system testing

- Other tasks in the fields of programming languages and software engineering

PL techniques for LLM applications

- PL techniques for prompt engineering

- PL techniques for agent design

- PL techniques for model training

- PL techniques for hallucination mitigation

- Other aspects where PL techniques can contribute to LLM applications

Benchmarks and Empirical Studies

- New benchmarks for specific PL tasks and empirical studies of existing LLM-driven PL techniques

- Empirical studies of existing benchmarks, such as works that summarize or critique existing benchmarks

- Empirical studies of explainable AI in PL tasks, such as proposing and investigating a specific hypothesis

We welcome the following three formats of submissions:

- Research paper: Similar to research papers presented at various conferences, these should include a well-designed methodology and experimental measurements

- Position paper: Presenting forward-looking viewpoints or showcasing ideas that have not been thoroughly evaluated through experiments

- Talk paper: Sharing one or more works that have already been published in other venues. There is no restriction on the venues as long as the topics of the works are in the targeted scope.

Evaluation Criteria

For Research Papers and Position Papers: Reviewers will evaluate each contribution for its soundness, significance, novelty, verifiability, and clarity. Submissions should clearly state how they are novel and how they improve upon existing work. We will employ a double-blind review process. Thus, no submission may reveal its authors’ identities. The authors must make every effort to honor the double-blind review process. In particular, the authors’ names must be omitted from the submission and references to their prior work should be in the third person.

For Talk Papers: Reviewers will assess whether the topics of the published works align with the scope requirements. The talk papers will undergo a single-blind review process, where authors can include their names and institutional affiliations in their submissions.

Submission Instructions

We will use HotCRP as the online submission system. Papers must be prepared in LaTeX, adhering to the ACM format available at http://sigplan.org/Resources/Author/#acmart-format using the sigplan option. Specifically, each kind of submission should conform to the following requirements:

- Research papers: 8–10 pages (not including references). The accepted research papers will be included in the proceedings.

- Position papers: 2–4 pages (not including references). The authors can choose whether to publish their position papers in the proceedings.

- Talk papers: 1 page offering the following two kinds of information: (1) The abstract of the talk; (2) Papers that will be introduced in the talk. In principle, there is no limit on the number of works to be introduced, but due to time limits for the talk, it is recommended not to exceed three papers.

Submissions can be made via the submission site by the submission deadline. We encourage authors to upload their paper information early (the PDF can be submitted later) so that conflicts can be entered properly for double-blind reviewing. If a submission is accepted, at least one author of the paper is required to attend the workshop and present the paper in person.

The official publication date of the workshop proceedings is the date the proceedings are made available by ACM. This date may be up to two weeks prior to the first day of SPLASH 2025. The official publication date affects the deadline for any patent filings related to published work.