The 5th ACM/IEEE International Conference on Automation of Software Test (AST 2024)

Software pervasiveness in both industry and digital society, together with the proliferation of Artificial Intelligence (AI) technologies, continuously gives rise to new needs for both software producers and consumers. Infrastructures, software components, and applications aim to hide their increasing complexity in order to appear more human-centric. However, design errors, poor integration, and time-consuming engineering phases can result in unreliable solutions that barely meet their intended objectives. In this context, Software Engineering processes continue to demand novel and further refined approaches to Software Quality Assurance (SQA). Software testing automation is a discipline that has produced noteworthy research in the last decade. The search for solutions to automatically test any kind of software is critical, and it encompasses several areas: from the generation of test cases, test oracles, and test stubs/mocks; through the definition of selection and prioritization criteria; up to the engineering of infrastructures governing the execution of testing sessions locally or remotely in the cloud.

AST continues a long record of international scientific forums on methods and solutions to automate software testing. AST 2024 is the 5th edition of the conference, which was previously organized as a workshop series starting in 2006. The conference promotes high-quality research contributions on methods for software test automation, as well as original case studies reporting practices in this field. We invite contributions that focus on: i) lessons learned from experiments with automatic testing in practice; ii) experiences with the adoption of testing tools, methods, and techniques; iii) best practices to follow in testing and their measurable consequences; and iv) theoretical approaches that are applicable in industry in the context of AST.

Authors of the best papers presented at AST 2024 will be invited to submit an extension of their work for possible inclusion in a special issue of the Software Testing, Verification, and Reliability (STVR) journal.

Mon 15 Apr (displayed time zone: Lisbon)

09:00 - 10:30 | Conference Opening & Keynote | AST 2024 at Amália Rodrigues | Chair(s): Francesca Lonetti, CNR-ISTI; Mehrdad Saadatmand, RISE Research Institutes of Sweden

09:00 (30 min) | Other | Chairs Welcome | Christof J. Budnik, Siemens Corporation, Corporate Technology; Jenny Li, Kean University, USA; Mehrdad Saadatmand, RISE Research Institutes of Sweden

09:30 (60 min) | Keynote | Exploring the landscape: Software Testing in the AI Era | Ina Schieferdecker, TU Berlin

10:30 - 11:00 | Coffee break | ICSE Catering

11:00 - 12:30 | Session 1: Test Coverage | AST 2024 at Amália Rodrigues | Chair(s): Gilles Perrouin, Fonds de la Recherche Scientifique - FNRS & University of Namur

11:00 (20 min) | Full paper | Mutation Coverage is not Strongly Correlated with Mutation Coverage

11:20 (20 min) | Full paper | Running a Red Light: An Investigation into Why Software Engineers (Occasionally) Ignore Coverage Checks | Alexander Sterk, Delft University of Technology; Mairieli Wessel, Radboud University; Eli Hooten, Sentry.io; Andy Zaidman, Delft University of Technology

11:40 (20 min) | Full paper | Coverage-based Strategies for the Automated Synthesis of Test Scenarios for Conversational Agents | Pablo C Canizares, Autonomous University of Madrid, Spain; Daniel Ávila, Autonomous University of Madrid; Sara Perez-Soler, Universidad Autónoma de Madrid; Esther Guerra, Universidad Autónoma de Madrid; Juan de Lara, Autonomous University of Madrid

12:00 (20 min) | Full paper | WallMauer: Robust Code Coverage Instrumentation for Android Apps | Michael Auer, University of Passau; Iván Arcuschin Moreno, University of Buenos Aires, Argentina; Gordon Fraser, University of Passau

12:30 - 14:00 | Lunch | ICSE Catering

14:00 - 15:30 | Session 2: Test Generation | AST 2024 at Amália Rodrigues | Chair(s): Sarmad Bashir, RISE Research Institutes of Sweden

14:00 (20 min) | Full paper | Using GitHub Copilot for Test Generation in Python: An Empirical Study | Khalid El Haji, Delft University of Technology; Carolin Brandt, Delft University of Technology; Andy Zaidman, Delft University of Technology

14:20 (20 min) | Full paper | Grammar-Based Action Selection Rules for Scriptless Testing | Lianne V. Hufkens, Open Universiteit; Fernando Pastor Ricós, Universitat Politècnica de València; Beatriz Marín, Universitat Politècnica de València; Tanja E. J. Vos, Universitat Politècnica de València and Open Universiteit

14:40 (20 min) | Full paper | Fences: Systematic Sample Generation for JSON Schemas using Boolean Algebra and Flow Graphs | Björn Otto, Institute for Automation and Communication, OVGU Magdeburg; Tobias Kleinert, Chair of Information and Automation Systems for Process and Material Technology, RWTH Aachen

15:00 (10 min) | Poster | Generating Software Tests for Mobile Applications Using Fine-Tuned Large Language Models | Jacob Hoffmann, Institute AIFB, Karlsruhe Institute of Technology (KIT); Demian Frister, Institute of Applied Informatics and Formal Description Methods (AIFB), Karlsruhe Institute of Technology (KIT)

15:30 - 16:00 | Coffee break | ICSE Catering

Tue 16 Apr (displayed time zone: Lisbon)

09:00 - 10:30 | Conference Opening & Keynote | AST 2024 at Amália Rodrigues | Chair(s): Francesca Lonetti, CNR-ISTI; Mehrdad Saadatmand, RISE Research Institutes of Sweden

09:15 (15 min) | Other | Chairs Welcome | Christof J. Budnik, Siemens Corporation, Corporate Technology; Jenny Li, Kean University, USA; Mehrdad Saadatmand, RISE Research Institutes of Sweden

09:30 (60 min) | Keynote | The future of testing: Unleashing creativity with power of AI | Marko Ivanković, Google / Universität Passau

10:30 - 11:00 | Coffee break | ICSE Catering

11:00 - 12:30 | Session 4: AI-based testing | AST 2024 at Amália Rodrigues | Chair(s): Mahshid Helali Moghadam, Scania R&D

11:00 (20 min) | Full paper | Machine Learning-based Test Case Prioritization using Hyperparameter Optimization | Md Asif Khan, Ontario Tech University; Akramul Azim, Ontario Tech University; Ramiro Liscano, Ontario Tech University; Kevin Smith, International Business Machines Corporation (IBM); Yee-Kang Chang, IBM; Qasim Tauseef, IBM; Gkerta Seferi, IBM

11:20 (20 min) | Full paper | Testing for Fault Diversity in Reinforcement Learning | Quentin Mazouni, Simula Research Laboratory; Helge Spieker, Simula Research Laboratory, Norway; Arnaud Gotlieb, Simula Research Laboratory; Mathieu Acher, University of Rennes, France / Inria, France / CNRS, France / IRISA, France

11:40 (20 min) | Full paper | FairPipes: Data Mutation Pipelines for Machine Learning Fairness | Camille Molinier, ESIR, University of Rennes; Paul Temple, IRISA; Gilles Perrouin, Fonds de la Recherche Scientifique - FNRS & University of Namur

12:00 (10 min) | Poster | Reducing Workload in Using AI-based API REST Test Generation | Benjamin Leu, University of Applied Sciences and Arts Northwestern Switzerland; Jonas Volken, University of Applied Sciences and Arts Northwestern Switzerland; Martin Kropp, University of Applied Sciences and Arts Northwestern Switzerland; Nejdet Dogru, Testifi GmbH; Craig Anslow, Victoria University of Wellington; Robert Biddle, Carleton University

12:10 (15 min) | Short paper | Identifying Performance Issues in Microservice Architectures through Causal Reasoning | Luca Giamattei, Università di Napoli Federico II; Antonio Guerriero, Università di Napoli Federico II; Ivano Malavolta, Vrije Universiteit Amsterdam; Cristian Mascia, University of Naples Federico II; Roberto Pietrantuono, Università di Napoli Federico II; Stefano Russo, Università di Napoli Federico II

12:30 - 14:00 | Lunch | ICSE Catering

14:00 - 15:30 | Session 5: Test process optimization | AST 2024 at Amália Rodrigues | Chair(s): Antonio Guerriero, Università di Napoli Federico II

14:00 (15 min) | Short paper | Dynamic Test Case Prioritization in Industrial Test Result Datasets | Alina Torbunova, Åbo Akademi University; Per Erik Strandberg, Westermo Network Technologies AB; Ivan Porres, Åbo Akademi University

14:15 (20 min) | Full paper | PAFOT: A Position-Based Approach for Finding Optimal Tests of Autonomous Vehicles | Victor Crespo-Rodriguez, Monash University; Neelofar Neelofar, Monash University; Aldeida Aleti, Monash University

14:35 (20 min) | Full paper | Evaluating String Distance Metrics for Reduction of Automatically Generated Test Suites | Islam Elgendy, University of Sheffield; Robert Hierons, The University of Sheffield; Phil McMinn, University of Sheffield

14:55 (20 min) | Full paper | An Overview of Microservice-Based Systems Used for Evaluation in Testing and Monitoring: A Systematic Mapping Study | Stefan Fischer, Software Competence Center Hagenberg; Pirmin Urbanke, Software Competence Center Hagenberg; Rudolf Ramler, Software Competence Center Hagenberg (SCCH); Monika Steidl, University of Innsbruck; Michael Felderer, German Aerospace Center (DLR) & University of Cologne

15:30 - 16:00 | Coffee break | ICSE Catering


Call for Papers

Software pervasiveness in both industry and digital society, together with the proliferation of Artificial Intelligence (AI) technologies, continuously gives rise to new needs for both software producers and consumers. Infrastructures, software components, and applications aim to hide their increasing complexity in order to appear more human-centric. However, design errors, poor integration, and time-consuming engineering phases can result in unreliable solutions that barely meet their intended objectives. In this context, Software Engineering processes and methods continue to demand novel and further refined approaches to Software Quality Assurance (SQA).

Software testing automation is a discipline that has produced noteworthy research over the last decades. The search for solutions to automatically test any kind of software is critical, and it encompasses several areas: from the generation of test cases, test oracles, and test stubs/mocks; through the definition of selection and prioritization criteria; up to the engineering of infrastructures governing the execution of testing sessions locally or remotely in the cloud.

The Automation of Software Test (AST) conference continues a long record of international scientific forums on methods and solutions to automate software testing. AST 2024 is the 5th edition of the conference, which was previously organized as a workshop series starting in 2006. The conference promotes high-quality research contributions on methods for software test automation, as well as original case studies reporting practices in this field. We invite contributions that focus on: i) lessons learned from experiments with automatic testing in practice; ii) experiences with the adoption of testing tools, methods, and techniques; iii) best practices to follow in testing and their measurable consequences; and iv) theoretical approaches that are applicable in industry in the context of AST.

With the emergence of generative AI, its application to test automation is imminent. This year's special theme is “Test automation for and with Generative AI”, with special sessions on applying test automation technologies to the testing of Generative AI applications and on using generative AI to facilitate test automation.

Topics of Interest

Submissions on the AST 2024 theme are especially encouraged, but papers on other topics relevant to the automation of software tests are also welcome. Topics of interest include, but are not limited to, the following:

- AI for Automated Software Testing

- Testing for AI robustness, safety, reliability and security

- Testing of AI-based Systems

- Effective testing through explainable AI

- Test automation of large, complex systems

- Test Automation in Software Process and Evolution, DevOps, Agile, CI/CD flows

- Metrics for testing - test efficiency, test coverage

- Tools for model-based V&V

- Test-driven development

- Standardization of test tools

- Test coverage metrics and criteria

- Product line testing

- Formal methods and theories for testing and test automation

- Test case generation based on formal, semi-formal and AI models

- Testing with software usage models

- Testing of reactive and object-oriented systems

- Software simulation by models, forecasts of behavior and properties

- Application of model checking in testing

- Tools for security specification, models, protocols, testing and evaluation

- Theoretical foundations of test automation

- Models as test oracles; test validation with models

- Testing anomaly detectors

- Testing cyber physical systems

- Automated usability and user experience testing

- Automated software testing for AI applications

We are interested in the following aspects related to AST:

- Problem identification. Analysis and specification of requirements for AST, and elicitation of problems that hamper wider adoption of AST

- Methodology. Novel methods and approaches for AST in the context of up-to-date software development methodologies

- Technology. Automation of various test techniques and methods for test-related activities, as well as for testing various types of software

- Tools and Environments. Issues and solutions in the development, operation, maintenance and evolution of tools and environments for AST, and their integration with other types of tools and runtime support platforms

- Empirical studies, Experience reports, and Industrial Contributions. Real experiences in using automated testing techniques, methods and tools in industry

- Visions of the future. Foresight and thought-provoking ideas for AST that can inspire new powerful research trends.

Authors of the best papers presented at AST 2024 will be invited to submit an extension of their work for possible inclusion in a special issue of the Software Testing, Verification, and Reliability (STVR) journal.

Submission

Four types of submissions are invited for both research and industry:

1. Regular Papers (up to 10 pages plus 2 additional pages of references)

- Research Paper

- Industrial Case Study

2. Short Papers (up to 4 pages plus 1 additional page of references)

- Research Paper

- Industrial Case Study

- Doctoral Student Research

3. Industrial Abstracts (up to 2 pages for all materials)

4. Lightning-talk/Poster Abstracts (up to 2 pages for all materials)

Instructions: Regular papers include both Research papers that present research in the area of software test automation, and Industrial Case Studies that report on practical applications of test automation. Regular papers must not exceed 10 pages for all materials (including the main text, appendices, figures, tables) plus 2 additional pages of references.

Short papers also include both Research papers and Industrial Case Studies. Short papers must not exceed 4 pages plus 1 additional page of references. Doctoral students working on software testing are encouraged to submit their work as short papers. AST will hold a dedicated session that brings together doctoral students working on software testing and experts assigned to each paper, so that students can discuss their research in a constructive, international atmosphere and prepare for their defense exam. The first author of such a submission must be the doctoral student, and the second author must be the advisor. Authors of selected submissions will be invited to give a brief presentation followed by a constructive discussion in the session dedicated to doctoral students.

Industrial abstract talks are specifically conceived to promote industrial participation: we require the first author of such papers to come from industry. Authors of accepted industrial abstracts are invited to give a talk of the same length and in the same sessions as regular papers. Industrial abstracts must not exceed 2 pages for all materials.

Lightning-talk abstracts are intended for new ideas and proposed innovative research topics and directions, similar to a typical poster submission. No finished results are required, but the ideas should be novel and feasible given the current state of the art. We expect all accepted papers to be presented both in slide presentation sessions and in poster discussions.

The submission website is: https://easychair.org/conferences/?conf=ast2024

All submissions must adhere to the following requirements:

- The page limit is strict (10 pages plus 2 additional pages of references for full papers; 4 pages plus 1 additional page of references for short papers; 2 pages for all materials in case of industrial abstracts and lightning-talk abstracts). It will not be possible to purchase additional pages at any point in the process (including after acceptance).

- Submissions must strictly conform to the ACM formatting instructions. All submissions must be in PDF.

- LaTeX users can use the following code at the start of the document:

\documentclass[sigconf]{acmart}

\acmConference[AST 2024]{5th International Conference on Automation of Software Test}{April 2024}{Lisbon, Portugal}

- Submissions must be unpublished original work and should not be under review or submitted elsewhere while under consideration at AST. AST 2024 will follow a single-blind review process. In addition, by submitting to AST, authors acknowledge that they are aware of and agree to be bound by the ACM Policy and Procedures on Plagiarism and the IEEE Plagiarism FAQ. The authors also acknowledge that they conform to the authorship policy of the ACM and the authorship policy of the IEEE.

The accepted regular and short papers, case studies, and industrial abstracts and lightning-talk abstracts will be published in the ICSE 2024 Co-located Event Proceedings and included in the IEEE and ACM Digital Libraries. Authors of accepted papers are required to register and present their accepted paper at the conference in order for the paper to be included in the proceedings and the Digital Libraries.

The official publication date is the date the proceedings are made available in the ACM or IEEE Digital Libraries.

This date may be up to two weeks prior to the first day of ICSE 2024. The official publication date affects the deadline for any patent filings related to published work.

Keynotes

Exploring the landscape: Software Testing in the AI Era

Abstract. The introduction of AI-powered tools into software engineering is reshaping the entire software development lifecycle, impacting design, development, operations, and maintenance. AI already plays a central role in tasks such as code generation, test generation, and error detection, contributing to increased automation in software engineering processes. However, while AI excels at automating certain tasks, it lacks the nuanced human judgement and creativity required to comprehend broader contexts, understand intricate business logic, and make subjective decisions in software development. Testing, with its reliance on human expertise, remains essential for the holistic evaluation of software. Despite AI’s ability to generate code snippets based on patterns and data, there is a gap in capturing the intricacies of the intended business logic or software requirements. This has given rise to new research topics such as AI-driven requirements engineering and AI-guided test design. In this keynote, we will take a comprehensive look at the current state of AI-driven software engineering. We will delve into selected aspects and highlight ongoing developments in the role of AI in software testing and its automation. By reviewing the state of the art, we aim to navigate the evolving intersection of AI and software testing, identifying opportunities, challenges, and the way forward in this dynamic landscape.

Speaker. Ina Schieferdecker

Bio. Ina Schieferdecker is an independent researcher and Honorary Professor of Software-Based Innovation at the Technische Universität Berlin. Previously, she was Director General for Research for Technological Sovereignty and Innovation at the German Federal Ministry of Education and Research (BMBF), Director of the Fraunhofer Institute for Open Communication Systems (FOKUS), Professor for Quality Engineering of Open Distributed Systems (QDS) at the Technische Universität Berlin (TUB), and Director of the Weizenbaum Institute for the Networked Society - The German Internet Institute. Ina is a member of the German Academy of Science and Engineering (acatech) and an honorary member of the German Testing Board (GTB). Ina’s research interests include quality engineering of open distributed systems, urban data platforms and open data, and digitalisation & transformation towards sustainability. Ina has received awards for her scientific work, including the German Prize for Software Quality (DPSQ), the EUREKA Innovation Award, and the Alfried Krupp von Bohlen und Halbach Award for Young Professors.

The future of testing: Unleashing creativity with power of AI

Abstract. Software testing requires discipline and attention to detail because it is monotonous and repetitive. At the same time, good testing is also a creative work of art. The software testing field has experienced a surge of recent advancements, fueled by generative AI. By automating the tedious and repetitive aspects of the work, AI is freeing up engineers' time and mental energy, letting them focus on the creative elements that elevate software quality. In this keynote, we’ll explore the nature of creativity in testing, drawing insights from other historically creative disciplines to illuminate the future of our field. We ask: What can software testing learn from the use of generative AI in the visual and textual arts? I will encourage engineers working on testing to see their work through a new lens, embracing AI as a powerful tool to unleash their full creative potential in ensuring software excellence.

Speaker. Marko Ivanković

Bio. Marko Ivanković is a Senior Staff Software Engineer at Google Switzerland GmbH. He has an MSc in Computer Science from the University of Zagreb, Croatia. He has worked in the Developer Productivity group for 13 years on test quality analysis, test coverage, mutation testing, dead code detection, reliability and failure analysis, and large-scale code health maintenance and refactoring.

His recent research has focused on the use of machine learning models in developer workflows, especially on improving code quality with AI assistance.


Previous Editions

- 2023 - Melbourne, Australia

- 2022 - Pittsburgh, USA

- 2021 - Virtual (originally in Madrid, Spain)

- 2020 - Seoul, South Korea

- 2019 - Montreal, Canada

- 2018 - Gothenburg, Sweden

- 2017 - Buenos Aires, Argentina

- 2016 - Austin, Texas, USA

- 2015 - Firenze, Italy

- 2014 - Hyderabad, India

- 2013 - San Jose, California, USA

- 2012 - Zurich, Switzerland

- 2011 - Waikiki, Hawaii, USA

- 2010 - Cape Town, South Africa

- 2009 - Vancouver, Canada

- 2008 - Leipzig, Germany

- 2007 - Minneapolis, USA

- 2006 - Shanghai, China

Awards

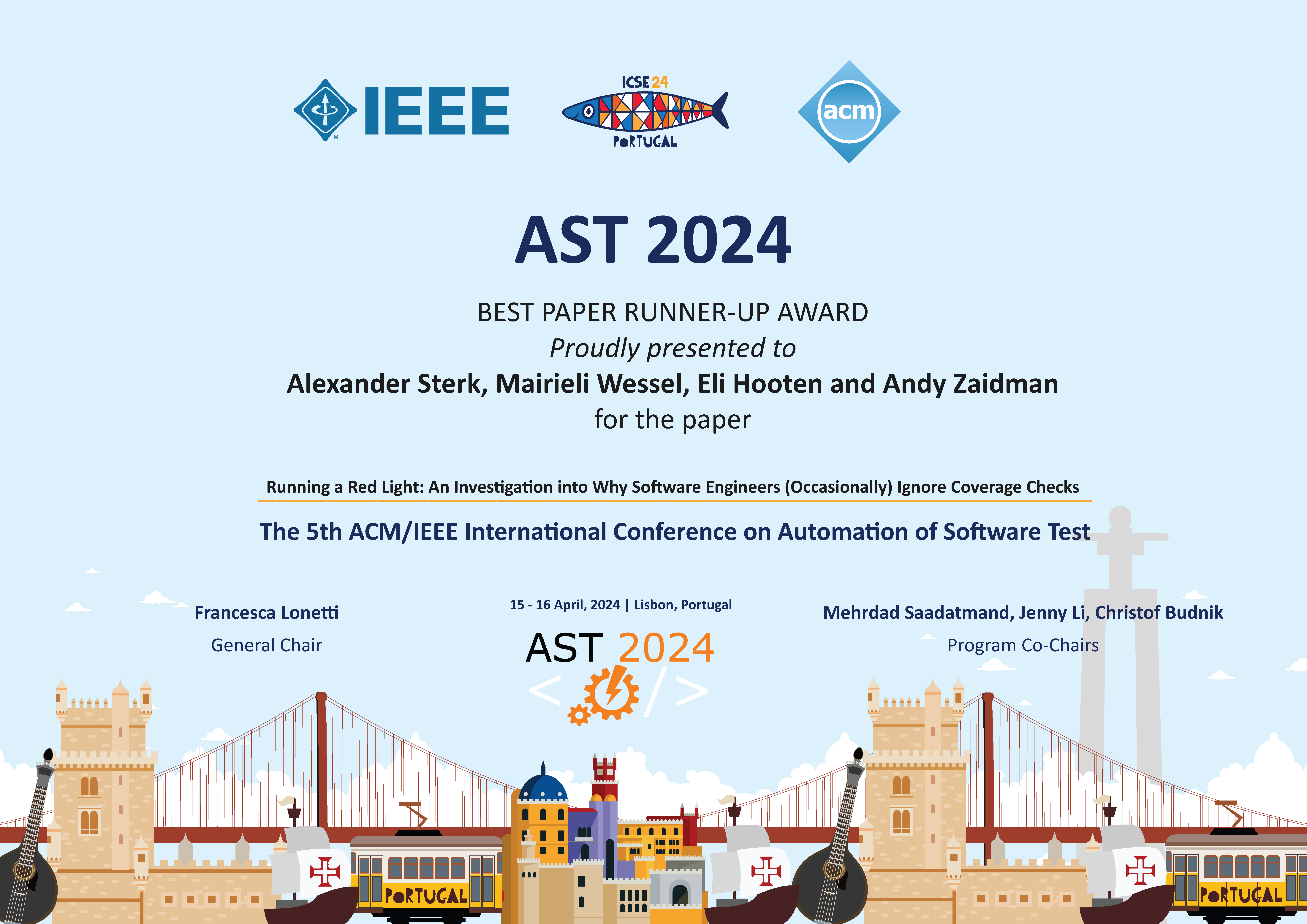

AST 2024 Best Paper Awards

- AST 2024 Best Paper Award: Quentin Mazouni, Helge Spieker, Arnaud Gotlieb and Mathieu Acher. Testing for Fault Diversity in Reinforcement Learning

- AST 2024 Runner-up Paper Award: Alexander Sterk, Mairieli Wessel, Eli Hooten and Andy Zaidman. Running a Red Light: An Investigation into Why Software Engineers (Occasionally) Ignore Coverage Checks

- AST 2024 Runner-up Paper Award: Michael Auer, Iván Arcuschin Moreno and Gordon Fraser. WallMauer: Robust Code Coverage Instrumentation for Android Apps

- AST 2024 Runner-up Paper Award: Elena Masserini, Davide Ginelli, Daniela Micucci, Daniela Briola and Leonardo Mariani. Anonymizing Test Data in Android: Does It Hurt?

Distinguished Reviewer Awards

- Markus Borg

- Porfirio Tramontana

- Breno Miranda