Mutation analysis involves mutating software artifacts; the resulting mutants are then used to evaluate the quality of software verification tools and techniques. It is considered the premier technique for evaluating the fault-revealing effectiveness of test suites, test generation techniques, and other testing approaches.

Ideas derived from mutation analysis have also been used to test artifacts at different levels of abstraction, including requirements, formal specifications, models, architectural design notations, and even informal descriptions. Recently, mutation has played an important role in software engineering for AI, such as in verifying trained models and behaviours. Furthermore, researchers and practitioners have investigated diverse forms of mutation, such as training or test data mutation, in combination with metamorphic testing to evaluate model performance in machine learning and to detect adversarial examples.

Mutation 2024 aims to be the premier forum for practitioners and researchers to discuss recent advances in the area of mutation analysis and propose new research directions. It will feature keynote and invited talks, and will invite submissions of full- and short-length research papers, full- and short-length industry papers, and ‘Hot Off the Press’ presentations.

Highlights

| Title | Speaker |
|---|---|
| Keynote: Mutation for AI and with AI | Jie M. Zhang |

Tue 28 May (displayed time zone: Eastern Time, US & Canada)

| Session | Start | Length | Type | Title | Authors |
|---|---|---|---|---|---|
| 08:00 - 09:00, Breakfast (Social) | 08:00 | 60m | Other | Breakfast & Registration | |
| 09:00 - 10:30 | 09:30 | 5m | Day opening | MUTATION opening | |
| 09:00 - 10:30 | 09:35 | 55m | Keynote | Keynote: Mutation for AI and with AI | |
| 11:00 - 12:30 | 11:00 | 30m | Talk | Mutant-Kraken: A Mutation Testing Tool for Kotlin | Josue Morales (Towson University), Lin Deng (Towson University), Josh Dehlinger (Towson University), Suranjan Chakraborty (Towson University) |
| 11:00 - 12:30 | 11:30 | 30m | Talk | Timed Model-Based Mutation Operators for Simulink Models | |
| 11:00 - 12:30 | 12:00 | 30m | Talk | Improving the Efficacy of Testing Scientific Software: Insights from Mutation Testing | |
| 14:00 - 15:30 | 14:00 | 30m | Talk | Test Harness Mutilation | Samuel Moelius (Trail of Bits) |
| 14:00 - 15:30 | 14:30 | 30m | Talk | An Empirical Evaluation of Manually Created Equivalent Mutants (pre-print available) | Philipp Straubinger (University of Passau), Alexander Degenhart (University of Passau), Gordon Fraser (University of Passau) |
| 14:00 - 15:30 | 15:00 | 30m | Talk | A Study of Flaky Failure De-Duplication to Identify Unreliably Killed Mutants | Abdulrahman Alshammari (George Mason University), Paul Ammann (George Mason University, USA), Michael Hilton (Carnegie Mellon University), Jonathan Bell (Northeastern University) |

Call for Papers

NOTICE (29 Jan 2024): To give authors a bit of extra time, we have decided to adopt a soft deadline: authors may update their submissions until Feb 04, but only if they made an initial submission before Feb 01. Please remember to register your paper and submit the title and abstract before Feb 01.

Mutation analysis involves mutating software artifacts; the resulting mutants are then used to evaluate the quality of software verification tools and techniques. It is considered the premier technique for evaluating the fault-revealing effectiveness of test suites, test generation techniques, and other testing approaches. Ideas derived from mutation analysis have also been used to test artifacts at different levels of abstraction, including requirements, formal specifications, models, architectural design notations, and even informal descriptions. Recently, mutation has played an important role in software engineering for AI, such as in verifying learned models and behaviors. Furthermore, researchers and practitioners have investigated diverse forms of mutation, such as training data or test data mutation, in combination with metamorphic testing to evaluate model performance in machine learning and to detect adversarial examples.

Mutation 2024 aims to be the premier forum for practitioners and researchers to discuss recent advances in the area of mutation analysis and propose new research directions. We invite submissions of both full-length and short-length research papers and especially encourage the submission of industry practice papers.

Topics of Interest

Topics of interest include, but are not limited to, the following:

- Evaluation of mutation-based test adequacy criteria, and comparative studies with other test adequacy criteria.

- Formal theoretical analysis of mutation testing.

- Empirical studies on any aspects of mutation testing.

- Mutation-based generation of program variants.

- Higher-order mutation testing.

- Mutation testing tools.

- Mutation for mobile, internet, and cloud-based systems (e.g., addressing QoS, power consumption, stress testing, and performance).

- Mutation for security and reliability.

- Novel mutation testing applications, and mutation testing in novel domains.

- Industrial experience with mutation testing.

- Mutation for artificial intelligence (e.g., data mutation, model mutation, and mutation-based test data generation).

Types of Submissions

Five types of papers can be submitted to the workshop:

- Full papers (10 pages): Research, case studies.

- Short papers (6 pages): Research in progress, tools.

- Full industrial papers (6 pages): Applications and lessons learned in industry.

- Short industrial papers (2 pages): Mutation testing in practice reports.

- Hot Off the Press (1 page abstract): Presentation of work recently published in other venues.

Each paper must conform to the two-column IEEE conference publication format (please use the letter format template and conference option) and must be submitted in PDF format via EasyChair. Submissions will be evaluated according to the relevance and originality of the work and their ability to generate discussion among workshop participants. Each submission will be reviewed by three reviewers, and all accepted papers will be published as part of the ICST proceedings (except for Hot Off the Press submissions).

Mutation 2024 will employ a double-anonymous review process (except for Hot Off the Press and industry submissions). Authors must make every effort to anonymize their papers to hide their identities throughout the review process. See the double-anonymous Q&A page for more information.

Industry papers

Industry papers should be given the keyword “industry” and are not subject to the double anonymity policy.

Hot Off the Press

Hot Off the Press submissions should contain 1) a short summary of the paper’s contribution, 2) an explanation of why those results are particularly interesting for MUTATION attendees, and 3) a link to the paper. Their title should start with “HOP:”. The original paper should have been published no earlier than January 1, 2022.

Important Dates

- Submission deadline: January 29, 2024

- Notification of acceptance: February 26, 2024

- Camera-ready: March 1, 2024

- Workshop date: May 2024

Organization

- Renzo Degiovanni, University of Luxembourg, Luxembourg

- Thomas Laurent, Trinity College Dublin, Ireland

Keynote

Mutation for AI and with AI

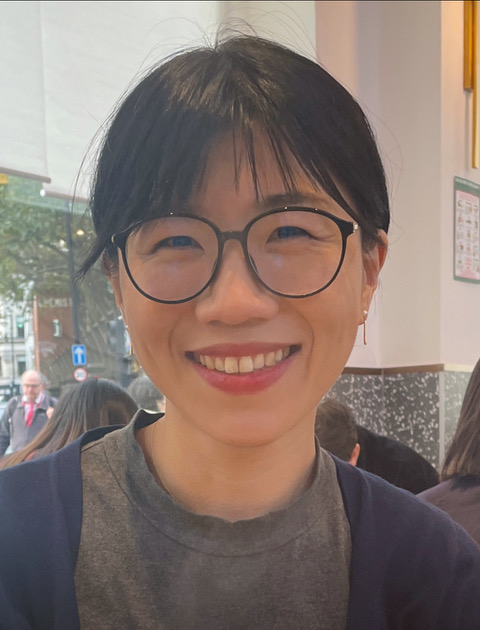

by Jie M. Zhang

Abstract

Traditional Mutation Testing applies a fixed set of mutation operators to generate mutants for the purpose of test assessment. However, the potential of mutants extends significantly beyond mere test evaluation.

In this talk, I will share my experiences in exploring the power of mutants in testing and improving AI trustworthiness (Mutation for AI) in various AI systems, as well as a recent practice that leverages large language models for more powerful mutants (AI for Mutation).

Bio

Dr. Jie M. Zhang is a lecturer (assistant professor) of computer science at King’s College London, UK. Before joining King’s, she was a Research Fellow at University College London and a research consultant for Meta. She received her PhD degree from Peking University in 2018. Her main research interests are software testing, software engineering and AI/LLMs, and AI trustworthiness. She has published many papers in top-tier venues including ICLR, ICSE, FSE, ASE, ISSTA, TSE, and TOSEM. She is a steering committee member of ICST and AIware. She is a Program co-chair of AIware 2024, Internetware 2024, ASE 2023 NIER track, SANER 2023 Journal-First Track, PRDC 2023 Fast Abstract Track, SBST 2021, Mutation 2021 & 2020, and ASE 2019 Student Research Competition. Over the last three years, she has been invited to give over 20 talks at conferences, universities, and IT companies, including four keynote talks. She has also been invited as a panelist for several seminars on large language models. She has been selected as one of the top fifteen 2023 Global Chinese Female Young Scholars in interdisciplinary AI. Her research has won the 2022 Transactions on Software Engineering Best Paper award and the ICLR 2021 spotlight paper award.

When

Tuesday 28th May at 9:30.