2001: A Space Odyssey Symposium - 50 years celebration

Tuesday, May 29, Afternoon

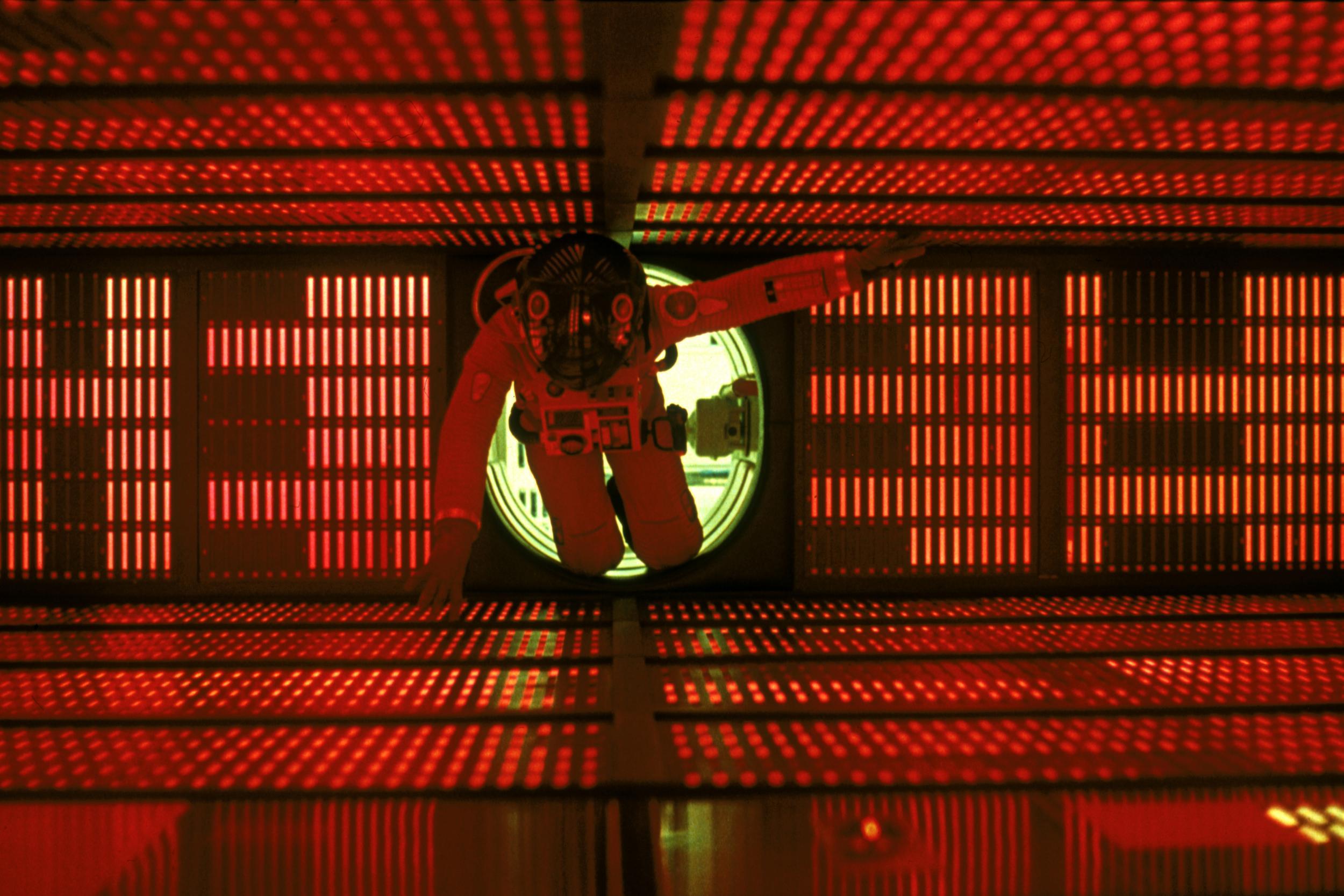

50 years of the movie 2001: A Space Odyssey

Along with the celebration of 50 years of software engineering, we can also celebrate the 50th anniversary of the famous science fiction movie 2001: A Space Odyssey. One of the central characters in the movie is the supercomputer HAL. HAL is the most powerful computer one could imagine at that time, with knowledge superior to a human being's: it controls the spaceship, finds solutions to the most complex problems, plays chess with the astronauts, and serves them continuously. And then something went terribly wrong. Why? What was the problem? Was it a (software) bug in the system, or an intrinsic problem of AI? These are questions the audience may ask while watching the movie. Fifty years after the movie was released, the same number of years as for software engineering, we can ask a number of similar, but also different, questions: Would it be possible to design a computer today that could match or exceed HAL’s capabilities? Can the software of today implement the functions HAL had? Are the problems addressed beyond what software can implement? What are the ethical questions and dangers of AI in such a context?

This symposium, complementary to the numerous ICSE technical tracks, will illuminate the movie in detail and then discuss the questions that arise from the presentation and from the panel debate. At the end there will be the movie show!

The Symposium program will include:

| Time | Session |
| --- | --- |
| 14:00 - 15:30 | Keynote speech: HAL’s Legacy after 50 years of 2001, by David Stork |
| 16:00 - 17:30 | Panel: Will computers be able to do what HAL did? Panelists: Olle Häggström, Dorna Behdadi, Thore Husfeldt, Prem Devanbu, David Stork |
| 18:30 - 21:15 | The movie show: 2001: A Space Odyssey |

Keynote David G. Stork: HAL’s Legacy after 50 years of 2001 Space Odyssey

Fifty years ago the public experienced the first screening of what is widely considered the greatest and most influential science fiction film of all time: 2001: A Space Odyssey. More than any filmmakers before or since, writer Arthur C. Clarke and director Stanley Kubrick strove to get the science and technology right, to envision a technological future with as much accuracy and precision as possible. To this end they worked with experts from the American space agency NASA, with corporations such as IBM, Honeywell, Pan Am Airlines, Hilton Hotels, and many others, and with leading academics such as artificial intelligence pioneer Marvin Minsky, all to portray a compelling and realistic vision of 35 years later. Some of these visions proved remarkably accurate for the year 2001: Astronaut Frank Poole plays HAL in an enjoyable game of chess; in 1997 IBM’s Deep Blue triumphed over world champion Garry Kasparov in full tournament play. In other areas, such as computer vision, language understanding, and artificial intelligence, the filmmakers’ visions were too optimistic. What about now, 50 years after the film’s release? It is clear that reality is finally catching up to, and in some cases exceeding, the film’s visions in areas such as computer hardware, computer vision, speech recognition, language understanding, computer graphics and others. Most importantly, in 2018 serious scholars and technologists are taking seriously the ethical concerns and deep dangers of artificial intelligence, as portrayed so compellingly in the landmark movie.

Keynote

Dr. David G. Stork

Dr. David G. Stork works in pattern recognition, machine learning, computer vision, and computational sensing and imaging, and is a pioneer in the application of rigorous computer image analysis to problems in the history and interpretation of fine art. He is a graduate in physics from MIT and the University of Maryland and has held faculty positions in Physics, Mathematics, Computer Science, Electrical Engineering, Statistics, Neuroscience, Psychology, and Art and Art History, variously at Wellesley and Swarthmore Colleges and Clark, Boston and Stanford Universities. Dr. Stork is a Fellow of IEEE, the Optical Society of America, SPIE, the International Association for Pattern Recognition, and the International Academy, Research and Industry Association, and a Senior Member of the Association for Computing Machinery. He holds 51 US patents, and his 200+ technical publications and eight books/proceedings volumes, which include Pattern Classification (2nd ed.) and HAL's Legacy: 2001's Computer as Dream and Reality, have garnered over 67,000 scholarly citations.

Olle Häggström is a professor of mathematical statistics at Chalmers University of Technology and a researcher at the Institute for Future Studies in Stockholm, as well as a member of the Royal Swedish Academy of Sciences. His foremost research achievements are probably in probability (no pun intended). The latest of his four books is Here Be Dragons: Science, Technology and the Future of Humanity (Oxford University Press, 2016).

Thore Husfeldt is professor of Theoretical Computer Science at Lund University and a core researcher at Basic Algorithms Research Copenhagen at IT University of Copenhagen, Denmark. Besides his narrow research focus, he is a public debater and avid populariser of topics on the intersection of computer science and society, including hosting the Cast IT conversations at castit.itu.dk about foundations of IT.

Prem Devanbu is Professor of Computer Science at the University of California at Davis. His main research interests include understanding why software corpora are so repetitive and predictable (aka "the Naturalness of Software"), and the application of statistical NLP and Machine Learning methods to improving Software Tools and Processes.

Dorna Behdadi is a PhD student in practical philosophy at the Department of Philosophy, Linguistics and Theory of Science at the University of Gothenburg (GU). Their dissertation project focuses on the question of moral agency in entities other than human beings. Which non-human beings can be morally responsible for their actions? Can other animals be moral agents? What about artificial intelligences like autonomous machines or software? If yes, is their moral agency the same as ours? Or do they earn it by meeting a modified or wholly different set of criteria? What ethical, legal and practical consequences follow?
