5th International Workshop on Artificial Intelligence in Software Testing (AIST 2025)

The integration of AI techniques in the domain of software testing represents a promising frontier, one that is still at the dawn of its potential. Over the past few years, software developers have witnessed a surge in innovative approaches aimed at streamlining the development lifecycle, with a particular focus on the testing phase. These approaches harness AI techniques, including Convolutional Neural Networks (CNNs), Deep Neural Networks (DNNs), and Large Language Models (LLMs), to transform the way we verify and validate software applications.

The adoption of AI in software testing yields numerous advantages. It significantly reduces the time and effort invested in repetitive and mundane testing tasks, allowing human testers to focus on more complex and creative aspects of testing, such as exploratory testing and user experience evaluation. Additionally, AI-driven testing improves software quality by increasing test coverage and mutation scores. The outcome is not just cost savings but also increased customer satisfaction, as the likelihood of critical software defects making it into production is greatly diminished.

The AIST workshop aspires to bring together a diverse community of researchers and practitioners. It aims to provide a platform for presenting and discussing cutting-edge research and development initiatives in AI-driven software testing. The workshop encourages collaboration, facilitating the exchange of knowledge and ideas and fostering a holistic understanding of the potential applications of AI in software testing. By embracing the broad spectrum of perspectives and topics under the AI umbrella, AIST seeks to be a catalyst for innovation, ultimately paving the way for more efficient and effective software testing.

Tue 1 Apr

Displayed time zone: Amsterdam, Berlin, Bern, Rome, Stockholm, Vienna

08:00 - 09:00

08:00 | 60 min | Registration | Registration (Social)

09:10 - 10:30

09:10 | 30 min | Talk | Adaptive Test Healing using LLM/GPT and Reinforcement Learning (AIST)

09:40 | 30 min | Talk | A System for Automated Unit Test Generation Using Large Language Models and Assessment of Generated Test Suites (AIST) | Andrea Lops (Polytechnic University of Bari, Italy), Fedelucio Narducci (Polytechnic University of Bari), Azzurra Ragone (University of Bari), Michelantonio Trizio (Wideverse), Claudio Bartolini (Wideverse s.r.l.)

10:10 | 20 min | Talk | From Implemented to Expected Behaviors: Leveraging Regression Oracles for Non-Regression Fault Detection Using LLMs (AIST) | Stefano Ruberto (JRC European Commission), Judith Perera Fuchs (University of Auckland), Gunel Jahangirova (King's College London), Valerio Terragni (University of Auckland)

10:30 - 11:00

10:30 | 30 min | Coffee break | Break (Social)

11:00 - 12:30

11:00 | 90 min | Panel | Evaluating Large Language Models for Software Testing: the Good, the Bad, and the Ugly (AIST) | Lionel Briand (University of Ottawa, Canada; Lero centre, University of Limerick, Ireland), Vincenzo Riccio (University of Udine), Annibale Panichella (Delft University of Technology), Gunel Jahangirova (King's College London)

14:00 - 15:30

14:00 | 90 min | Keynote | AI Testing AI: Creating positive feedback loops for improved software quality (AIST) | Robert Feldt (Chalmers University of Technology and University of Gothenburg; Blekinge Institute of Technology)

15:30 - 16:00

15:30 | 30 min | Coffee break | Break (Social)

16:00 - 17:20

16:00 | 30 min | Talk | Generating Latent Space-Aware Test Cases for Neural Networks Using Gradient-Based Search (AIST) | Simon Speth (Technical University of Munich), Christoph Jasper (TUM), Claudius Jordan, Alexander Pretschner (TU Munich) | Pre-print

16:30 | 20 min | Talk | Test2Text: AI-Based Mapping between Autogenerated Tests and Atomic Requirements (AIST) | Elena Treshcheva (Exactpro), Iosif Itkin (Exactpro Systems), Rostislav Yavorskiy (Exactpro Systems), Nikolai Dorofeev

16:50 | 30 min | Talk | LLM Prompt Engineering for Automated White-Box Integration Test Generation in REST APIs (pre-recorded video presentation + online Q&A) (AIST) | André Mesquita Rincon (Federal Institute of Tocantins, IFTO / Federal University of São Carlos, UFSCar), Auri Vincenzi (Federal University of São Carlos), João Pascoal Faria (Faculty of Engineering, University of Porto and INESC TEC)


Call for Papers

We invite novel papers from both academia and industry on AI applied to software testing that cover, but are not limited to, the following aspects:

- AI for test case design, test generation, test prioritization, and test reduction.

- AI for load testing and performance testing.

- AI for monitoring running systems or optimizing those systems.

- Explainable AI for software testing.

- Case studies, experience reports, benchmarking, and best practices.

- New ideas, emerging results, and position papers.

- Industrial case studies with lessons learned or practical guidelines.

Papers can be of one of the following types:

- Full Papers (max. 8 pages): Papers presenting mature research results or industrial practices.

- Short Papers (max. 4 pages): Papers presenting new ideas or preliminary results.

- Tool Papers (max. 4 pages): Papers presenting an AI-enabled testing tool. Tool papers should communicate the purpose and use cases for the tool. The tool should be made available (either free to download or for purchase).

- Position Papers (max. 2 pages): Position statements and open challenges, intended to spark discussion or debate.

The reviewing process is single-blind; therefore, papers do not need to be anonymized. Papers must conform to the two-column IEEE conference publication format and should be submitted via EasyChair using the following link: https://easychair.org/conferences/?conf=icst2025. You must select the 5th International Workshop on Artificial Intelligence in Software Testing as the track for your submission.

All submissions must be original, unpublished, and not submitted for publication elsewhere. Submissions will be evaluated on the relevance and originality of the work and on their ability to generate discussion among workshop participants. Each submission will be reviewed by three reviewers, and all accepted papers will be published in the ICST proceedings. For each accepted paper, at least one author must register for the workshop and present the paper.

Important Dates

- Submission deadline (extended): 10 January 2025 (originally 3 January 2025)

- Notification of acceptance: 3 February 2025

- Camera-ready: 13 February 2025

- Workshop: 1 April 2025

Keynote

AI Testing AI: Creating positive feedback loops for improved software quality

Date: 1st April 2025, 14:00 - 15:30

Room: Room B (Ground Floor)

Abstract

Large Language Models (LLMs) and (Generative) AI present unique testing challenges, but they also provide opportunities for self-reinforcing improvements in software quality. This talk explores how AI-based systems can not only be tested more effectively but also drive the evolution of software testing itself. Through LLM-assisted test automation and testing decision support, diversity-driven validation, and AI-powered GUI and API testing, we should be able to close the loop and create tools that continuously refine both the (AI-based) software they test and the testing strategies they use. This convergence of AI and software testing can open the door to ever-smarter, more autonomous, and more resilient software systems.

Speaker: Robert Feldt

Dr. Robert Feldt is a Professor of Software Engineering at Chalmers University of Technology in Gothenburg, where he is part of the Software Engineering division at the Department of Computer Science and Engineering. He is also a part-time Professor of Software Engineering at Blekinge Institute of Technology in Karlskrona, Sweden, and co-Editor-in-Chief of the Empirical Software Engineering (EMSE) journal. His interests span software engineering in general, with a particular focus on software testing, requirements engineering, psychological and social aspects, and agile development methods and practices. He is "one of the pioneers" of the search-based software engineering field (according to an ACM Computing Survey of SBSE) and has a general interest in applying Artificial Intelligence and Machine Learning both during software development and within software systems. Drawing on his studies in psychology, he also works to focus more research on human aspects, an area he and his colleagues have proposed to call Behavioral Software Engineering.

Panel

Evaluating Large Language Models for Software Testing: the Good, the Bad, and the Ugly

Date: 1st April 2025, 11:00 - 12:30

Room: Room B (Ground Floor)

Panel topic

The rapid advancement of large language models (LLMs) has opened new opportunities for the software testing community! However, factors such as their inherent randomness, exposure to data leakage (benchmarks that may already appear in the training data), and their many configurable parameters make it challenging to evaluate their potential adequately.

This panel discussion brings together experts from the field to critically examine the methodological aspects of LLM evaluation in software testing research.

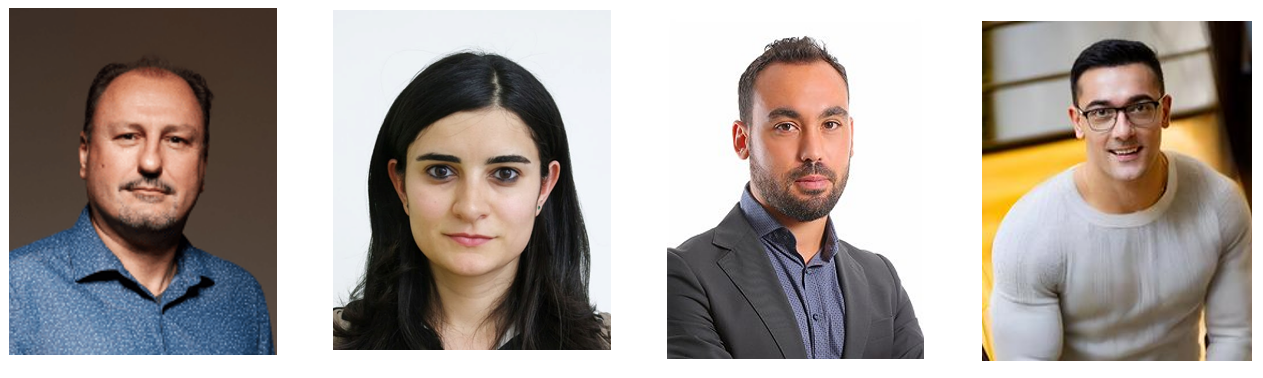

Panelists

Prof. Lionel Briand, Lero SFI Centre for Software Research and University of Limerick, Ireland; University of Ottawa, Canada

Prof. Gunel Jahangirova, King’s College London, United Kingdom

Prof. Vincenzo Riccio, University of Udine, Italy

Prof. Annibale Panichella, Delft University of Technology, the Netherlands