Reproducibility Studies and Negative Results (RENE) Track SANER 2025

Wed 5 Mar
Displayed time zone: Eastern Time (US & Canada)

07:30 - 17:00 | Registration | Research Papers at Atrium Lassonde (M-3500) | Registration is available throughout the day at Atrium Lassonde.

08:30 - 09:00 | Opening (Welcome from the GCs and PCs) | Research Papers at Amphithéâtre Bernard Lamarre (C-631) | Chair(s): Maria Teresa Baldassarre Department of Computer Science, University of Bari, Mohammad Hamdaqa Polytechnique Montréal, Foutse Khomh Polytechnique Montréal, Masud Rahman Dalhousie University

09:00 - 10:30 | Keynote 1 | Research Papers at Amphithéâtre Bernard Lamarre (C-631) | Chair(s): Maria Teresa Baldassarre Department of Computer Science, University of Bari, Masud Rahman Dalhousie University

09:00 90m Keynote | Keynote 1: AI for Code Generation to Software Engineering | Research Papers | Baishakhi Ray Columbia University, New York

11:00 - 12:30 | Empirical Studies & LLM | Industrial Track / Research Papers / Reproducibility Studies and Negative Results (RENE) Track at L-1710 | Chair(s): Diego Elias Costa Concordia University, Canada

11:00 15m Talk | Beyond pip install: Evaluating LLM agents for the automated installation of Python projects | Research Papers | Louis Mark Milliken KAIST, Sungmin Kang National University of Singapore, Shin Yoo Korea Advanced Institute of Science and Technology | Pre-print

11:18 12m Talk | On the Compression of Language Models for Code: An Empirical Study on CodeBERT | Research Papers | Giordano d'Aloisio University of L'Aquila, Luca Traini University of L'Aquila, Federica Sarro University College London, Antinisca Di Marco University of L'Aquila | Pre-print

11:30 15m Talk | Can Large Language Models Discover Metamorphic Relations? A Large-Scale Empirical Study | Research Papers | Jiaming Zhang University of Science and Technology Beijing, Chang-ai Sun University of Science and Technology Beijing, Huai Liu Swinburne University of Technology, Sijin Dong University of Science and Technology Beijing

11:45 15m Talk | Revisiting the Non-Determinism of Code Generation by the GPT-3.5 Large Language Model | Reproducibility Studies and Negative Results (RENE) Track | Salimata Sawadogo Centre d'Excellence Interdisciplinaire en Intelligence Artificielle pour le Développement (CITADEL), Aminata Sabané Université Joseph KI-ZERBO, Centre d'Excellence CITADELLE, Rodrique Kafando Centre d'Excellence Interdisciplinaire en Intelligence Artificielle pour le Développement (CITADEL), Tegawendé F. Bissyandé University of Luxembourg

12:00 15m Talk | Language Models to Support Multi-Label Classification of Industrial Data | Industrial Track | Waleed Abdeen Blekinge Institute of Technology, Michael Unterkalmsteiner, Krzysztof Wnuk Blekinge Institute of Technology, Alessio Ferrari CNR-ISTI, Panagiota Chatzipetrou

13:00 - 14:00

13:00 60m Meeting | Steering Committee Meeting | Research Papers

14:00 - 15:30 | Smart Contracts & Microservices | Research Papers / Industrial Track at L-1710 | Chair(s): Anthony Cleve University of Namur

14:00 15m Talk | LLM-based Generation of Solidity Smart Contracts from System Requirements in Natural Language: the AstraKode Case | Industrial Track | Gabriele De Vito Università di Salerno, Damiano D'Amici AstraKode S.r.l. (Head of Product and co-founder), Fabiano Izzo AstraKode S.r.l. (CEO and co-founder), Filomena Ferrucci University of Salerno, Dario Di Nucci University of Salerno

14:15 15m Talk | Deep Smart Contract Intent Detection | Research Papers | Youwei Huang Institute of Intelligent Computing Technology, Suzhou, CAS, Sen Fang North Carolina State University, Jianwen Li, Bin Hu Institute of Computing Technology, Chinese Academy of Sciences, Jiachun Tao Suzhou City University, Tao Zhang Macau University of Science and Technology | Pre-print

14:30 15m Talk | Enhancing Microservice Migration Transformation from Monoliths with Graph Neural Networks | Research Papers | Deli Chen Hainan University, Chunyang Ye Hainan University, Hui Zhou Hainan University, Shanyan Lai Hainan University, Bo Li Hainan University

14:45 15m Talk | Specification Mining for Smart Contracts with Trace Slicing and Predicate Abstraction | Research Papers | Ye Liu, Yixuan Liu Nanyang Technological University, Yi Li Nanyang Technological University, Cyrille Artho KTH Royal Institute of Technology, Sweden

15:00 15m Talk | Towards Change Impact Analysis in Microservices-based System Evolution | Research Papers | Tomas Cerny University of Arizona, Gabriel Goulis Systems and Industrial Engineering, University of Arizona, Amr Elsayed The University of Arizona | Pre-print

15:15 15m Talk | An Empirical Study on Microservices Deployment Trends, Topics and Challenges in Stack Overflow | Research Papers | Amina Bouaziz Laval University, Mohamed Aymen Saied Laval University, Mohammed Sayagh ETS Montreal, University of Quebec, Ali Ouni ETS Montreal, University of Quebec, Mohamed Wiem Mkaouer University of Michigan - Flint

14:00 - 15:30 | API and Dependency Analysis | Research Papers at L-1720 | Chair(s): Raula Gaikovina Kula Osaka University

14:00 15m Talk | Analysing Software Supply Chains of Infrastructure as Code: Extraction of Ansible Plugin Dependencies | Research Papers | Ruben Opdebeeck Vrije Universiteit Brussel, Bram Adams Queen's University, Coen De Roover Vrije Universiteit Brussel | Pre-print

14:15 15m Talk | Enhancing Automated Vulnerability Repair through Dependency Embedding and Pattern Store | Research Papers | Qingao Dong Beihang University, Yuanzhang Lin Beihang University, Xiang Gao Beihang University, Hailong Sun Beihang University

14:30 15m Talk | Improving API Knowledge Comprehensibility: A Context-Dependent Entity Detection and Context Completion Approach using LLM | Research Papers | Zhang Zhang National University of Defense Technology, Xinjun Mao National University of Defense Technology, Shangwen Wang National University of Defense Technology, Kang Yang National University of Defense Technology, Tanghaoran Zhang National University of Defense Technology, Fei Gao National University of Defense Technology, Xunhui Zhang National University of Defense Technology, China

14:45 15m Talk | Pay Your Attention on Lib! Android Third-Party Library Detection via Feature Language Model | Research Papers | Dahan Pan Shanghai Jiao Tong University, Yi Xu Shanghai Jiao Tong University, Runhan Feng Shanghai Jiao Tong University, Donghui Yu Shanghai Jiao Tong University, Jiawen Chen Shanghai Jiao Tong University, Ya Fang Shanghai Jiao Tong University, Yuanyuan Zhang Shanghai Jiao Tong University

15:00 15m Talk | THINK: Tackling API Hallucinations in LLMs via Injecting Knowledge | Research Papers | Jiaxin Liu National University of Defense Technology, Yating Zhang National University of Defense Technology, Deze Wang National University of Defense Technology, Yiwei Li National University of Defense Technology, Wei Dong National University of Defense Technology

16:00 - 17:00 | Townhall Meeting | Research Papers at Atrium Lassonde (M-3500) | Chair(s): Rick Kazman University of Hawai‘i at Mānoa

Thu 6 Mar

08:30 - 09:00 | MIP Announcement & Talk | Research Papers at Amphithéâtre Bernard Lamarre (C-631) | Chair(s): Bram Adams Queen's University

09:00 - 10:30 | Keynote 2 | Research Papers at Amphithéâtre Bernard Lamarre (C-631) | Chair(s): Maria Teresa Baldassarre Department of Computer Science, University of Bari, Masud Rahman Dalhousie University

09:00 90m Keynote | Keynote 2: Source Code Diff Revolution | Research Papers | Nikolaos Tsantalis Concordia University

11:00 - 12:30 | Software Testing & Debugging | Research Papers at L-1710 | Chair(s): Coen De Roover Vrije Universiteit Brussel

11:00 15m Talk | A Multi-Language Tool for Generating Unit Tests from Execution Traces | Research Papers | Gabriel Darbord Inria, Nicolas Anquetil University of Lille, Lille, France, Anne Etien Université de Lille, CNRS, Inria, Centrale Lille, UMR 9189 - CRIStAL, Benoit Verhaeghe Berger-Levrault

11:15 15m Talk | CSE-WSS: Code Structure Enhancement Framework with Weighted Semantic Similarity for Changeset-based Bug Localization | Research Papers | Zhenghao Liu School of Software Engineering, South China University of Technology, Guangzhou, China, Li Yuan School of Software Engineering, South China University of Technology, Guangzhou, China, Jiexin Wang School of Software Engineering, South China University of Technology, Guangzhou, China, Yi Cai School of Software Engineering, South China University of Technology, Guangzhou, China

11:30 15m Talk | From Bug Reports to Workarounds: The Real-World Impact of Compiler Bugs | Research Papers

11:45 15m Talk | Optimizing Class Integration Testing with Criticality-Driven Test Order Generation | Research Papers | Yanru Ding China University of Mining and Technology, Yanmei Zhang China University of Mining and Technology, Guan Yuan China University of Mining and Technology, Shujuan Jiang China University of Mining and Technology, Wei Dai China University of Mining and Technology, Luciano Baresi Politecnico di Milano

12:00 15m Talk | Generating and Contributing Test Cases for C Libraries from Client Code: A Case Study | Research Papers | Ahmed Zaki Imperial College London, Arindam Sharma Imperial College London, Cristian Cadar Imperial College London

11:00 - 12:30 | Software Analysis & Recommendation Systems | Research Papers / Industrial Track / Early Research Achievement (ERA) Track at L-1720 | Chair(s): Brittany Reid Nara Institute of Science and Technology

11:00 15m Talk | A First Look at Package-to-Group Mechanism: An Empirical Study of the Linux Distributions | Research Papers | Dongming Jin Key Lab of High-Confidence of Software Technologies (PKU), Ministry of Education, Nianyu Li ZGC Lab, China, Kai Yang Zhongguancun Laboratory, Minghui Zhou Peking University, Zhi Jin Peking University

11:15 15m Talk | Preprocessing is All You Need: Boosting the Performance of Log Parsers With a General Preprocessing Framework | Research Papers | Qiaolin Qin Polytechnique Montréal, Roozbeh Aghili Polytechnique Montréal, Heng Li Polytechnique Montréal, Ettore Merlo Polytechnique Montreal | Pre-print

11:30 7m Talk | Boosting Large Language Models for System Software Retargeting: A Preliminary Study | Early Research Achievement (ERA) Track | Ming Zhong SKLP, Institute of Computing Technology, CAS, Fang Lv Institute of Computing Technology, Chinese Academy of Sciences, Lulin Wang, Lei Qiu SKLP, Institute of Computing Technology, CAS; University of Chinese Academy of Sciences, Hongna Geng SKLP, Institute of Computing Technology, CAS, Huimin Cui Institute of Computing Technology, Chinese Academy of Sciences, Xiaobing Feng ICT CAS

11:37 15m Talk | Analyzing Logs of Large-Scale Software Systems using Time Curves Visualization | Industrial Track | Dmytro Borysenkov, Adriano Vogel, Sören Henning Johannes Kepler University Linz, Esteban Pérez Wohlfeil

11:52 15m Talk | Building Your Own Product Copilot: Challenges, Opportunities, and Needs | Industrial Track | Chris Parnin Georgia Tech, Gustavo Soares Microsoft, Rahul Pandita GitHub, Inc., Sumit Gulwani Microsoft, Jessica Rich, Austin Henley University of Tennessee

12:07 15m Talk | Filter-based Repair of Semantic Segmentation in Safety-Critical Systems | Industrial Track | Sebastian Schneider, Tomas Sujovolsky, Paolo Arcaini National Institute of Informatics, Fuyuki Ishikawa National Institute of Informatics, Truong Vinh Truong Duy

11:00 - 12:30 | Program Analysis | Research Papers at M-1410 | Chair(s): Rrezarta Krasniqi University of North Carolina at Charlotte

11:00 15m Talk | Adapting Knowledge Prompt Tuning for Enhanced Automated Program Repair | Research Papers | Pre-print

11:15 15m Talk | A Metric for Measuring the Impact of Rare Paths on Program Coverage | Research Papers

11:30 15m Talk | A Progressive Transformer for Unifying Binary Code Embedding and Knowledge Transfer | Research Papers | Hanxiao Lu Columbia University, Hongyu Cai Purdue University, Yiming Liang Purdue University, Antonio Bianchi Purdue University, Z. Berkay Celik Purdue University

11:45 15m Talk | Is This You, LLM? Recognizing AI-written Programs with Multilingual Code Stylometry | Research Papers | Andrea Gurioli DISI - University of Bologna, Maurizio Gabbrielli DISI - University of Bologna, Stefano Zacchiroli Télécom Paris, Polytechnic Institute of Paris | Pre-print

12:00 15m Talk | SpeedGen: Enhancing Code Efficiency through Large Language Model-Based Performance Optimization | Research Papers | Nils Purschke Technical University of Munich, Sven Kirchner Technical University of Munich, Alois Knoll Technical University of Munich

12:15 15m Talk | StriCT-BJ: A String Constraint Benchmark from Real Java Programs | Research Papers | Chi Zhang Institute of Software at Chinese Academy of Sciences; University of Chinese Academy of Sciences, Jian Zhang Institute of Software at Chinese Academy of Sciences; University of Chinese Academy of Sciences

14:00 - 15:30 | Defect Prediction & Analysis | Research Papers / Industrial Track / Journal First Track at L-1710 | Chair(s): Rrezarta Krasniqi University of North Carolina at Charlotte

14:00 15m Talk | An ensemble learning method based on neighborhood granularity discrimination index and its application in software defect prediction | Research Papers | Yuqi Sha College of Information Science and Technology, Qingdao University of Science and Technology, Feng Jiang College of Information Science and Technology, Qingdao University of Science and Technology, Qiang Hu College of Information Science and Technology, Qingdao University of Science and Technology, Yifan He Institute of Cosmetic Regulatory Science, Beijing Technology and Business University

14:15 15m Talk | ALOGO: A Novel and Effective Framework for Online Cross-project Defect Prediction | Research Papers | Rongrong Shi Beijing Jiaotong University, Yuxin He Beijing Jiaotong University, Ying Liu Beijing Jiaotong University, Zonghao Li Beijing Jiaotong University, Jingxin Su Beijing Jiaotong University, Haonan Tong Beijing Jiaotong University

14:30 15m Talk | Cross-System Software Log-based Anomaly Detection Using Meta-Learning | Research Papers | Yuqing Wang University of Helsinki, Finland, Mika Mäntylä University of Helsinki and University of Oulu, Jesse Nyyssölä University of Helsinki, Ke Ping University of Helsinki, Liqiang Wang University of Wyoming | Pre-print

14:45 15m Talk | RADICE: Causal Graph Based Root Cause Analysis for System Performance Diagnostic | Industrial Track | Andrea Tonon Huawei Ireland Research Center, Meng Zhang Shandong University, Bora Caglayan Huawei Ireland Research Center, Fei Shen Huawei Nanjing Research Center, Tong Gui, Mingxue Wang Huawei Ireland Research Center, Rong Zhou

15:00 15m Talk | Can We Trust the Actionable Guidance from Explainable AI Techniques in Defect Prediction? | Research Papers

15:15 15m Talk | Making existing software quantum safe: A case study on IBM Db2 | Journal First Track | Lei Zhang University of Maryland Baltimore County, Andriy Miranskyy Toronto Metropolitan University (formerly Ryerson University), Walid Rjaibi IBM Canada Lab, Greg Stager IBM Canada Lab, Michael Gray IBM, John Peck IBM

16:00 - 17:00 | Ask Me Anything Session (Newcomers Onboarding) | Research Papers at L-1710 | Chair(s): Jeremy Bradbury Ontario Tech University, Foutse Khomh Polytechnique Montréal

16:00 - 17:00 | Software Ecosystem | Journal First Track / Early Research Achievement (ERA) Track / Research Papers at M-2101 | Chair(s): Chris Parnin Microsoft

16:00 15m Talk | CapAssess: An Endeavor to Assess and Enhance Linux Capabilities Utilization | Research Papers | Jingzi Meng Institute of Information Engineering, Chinese Academy of Sciences, Yuewu Wang University of Chinese Academy of Sciences, Lingguang Lei Institute of Information Engineering, Chinese Academy of Sciences, Jiwu Jing University of Chinese Academy of Sciences, Pingjian Wang Institute of Information Engineering, Chinese Academy of Sciences, Chunjing Kou University of Chinese Academy of Sciences, Wang Peng University of Chinese Academy of Sciences

16:15 7m Talk | Service Extraction from Object-Oriented Monolithic Systems: Supporting Incremental Migration | Early Research Achievement (ERA) Track | Soufyane Labsari Université Lille, CNRS, Centrale Lille, Inria, UMR 9189 - CRIStAL, Imen Sayar Univ. Lille, CNRS, Inria, Centrale Lille, UMR 9189 CRIStAL, F-59000 Lille, France, Nicolas Anquetil University of Lille, Lille, France, Benoit Verhaeghe Berger-Levrault, Anne Etien Université de Lille, CNRS, Inria, Centrale Lille, UMR 9189 - CRIStAL

16:22 15m Talk | GitHub Marketplace for Automation and Innovation in Software Production | Journal First Track | Sk Golam Saroar York University, Waseefa Ahmed York University, Elmira Onagh York University, Maleknaz Nayebi York University

16:37 15m Talk | Protect Your Secrets: Understanding and Measuring Data Exposure in VSCode Extensions | Research Papers | Pre-print

Fri 7 Mar

09:00 - 10:30 | Keynote 3: IVADO | Research Papers at Amphithéâtre Bernard Lamarre (C-631) | Chair(s): Foutse Khomh Polytechnique Montréal

09:00 10m Talk | IVADO Presentation | Research Papers

09:10 40m Keynote | Keynote 3: Advances and Challenges in Foundation Agents: From Brain-Inspired Intelligence to Evolutionary, Collaborative, and Safe Systems | Research Papers | Bang Liu DIRO & Mila, Université de Montréal

09:50 40m Talk | Keynote 4: LLMs: Facts, Lies, Reasoning and Software Agents in the Real World | Research Papers | Chris Pal Polytechnique Montreal

11:00 - 12:30 | Change Management & Program Comprehension | Reproducibility Studies and Negative Results (RENE) Track / Research Papers / Early Research Achievement (ERA) Track at L-1710 | Chair(s): Masud Rahman Dalhousie University

11:00 15m Talk | AdvFusion: Adapter-based Knowledge Transfer for Code Summarization on Code Language Models | Research Papers | Iman Saberi University of British Columbia Okanagan, Amirreza Esmaeili University of British Columbia, Fatemeh Hendijani Fard University of British Columbia, Chen Fuxiang University of Leicester

11:15 15m Talk | EarlyPR: Early Prediction of Potential Pull-Requests from Forks | Research Papers

11:30 15m Talk | The Hidden Challenges of Merging: A Tool-Based Exploration | Research Papers | Luciana Gomes UFCG, Melina Mongiovi Federal University of Campina Grande, Brazil, Sabrina Souto UEPB, Everton L. G. Alves Federal University of Campina Grande

11:45 7m Talk | On the Performance of Large Language Models for Code Change Intent Classification | Early Research Achievement (ERA) Track | Issam Oukay Department of Software and IT Engineering, ETS Montreal, University of Quebec, Montreal, Canada, Moataz Chouchen Department of Electrical and Computer Engineering, Concordia University, Montreal, Canada, Ali Ouni ETS Montreal, University of Quebec, Fatemeh Hendijani Fard University of British Columbia

11:52 15m Talk | Revisiting Method-Level Change Prediction: Comparative Evaluation at Different Granularities | Reproducibility Studies and Negative Results (RENE) Track | Hiroto Sugimori School of Computing, Institute of Science Tokyo, Shinpei Hayashi Institute of Science Tokyo | DOI, Pre-print

11:00 - 12:30 | Mining Software Repositories | Research Papers / Early Research Achievement (ERA) Track / Journal First Track / Reproducibility Studies and Negative Results (RENE) Track at L-1720 | Chair(s): Brittany Reid Nara Institute of Science and Technology

11:00 15m Talk | An Empirical Study of Transformer Models on Automatically Templating GitHub Issue Reports | Research Papers | Jin Zhang Hunan Normal University, Maoqi Peng Hunan Normal University, Yang Zhang National University of Defense Technology, China

11:15 15m Talk | How to Select Pre-Trained Code Models for Reuse? A Learning Perspective | Research Papers | Zhangqian Bi Huazhong University of Science and Technology, Yao Wan Huazhong University of Science and Technology, Zhaoyang Chu Huazhong University of Science and Technology, Yufei Hu Huazhong University of Science and Technology, Junyi Zhang Huazhong University of Science and Technology, Hongyu Zhang Chongqing University, Guandong Xu University of Technology, Hai Jin Huazhong University of Science and Technology | Pre-print

11:30 7m Talk | Uncovering the Challenges: A Study of Corner Cases in Bug-Inducing Commits | Early Research Achievement (ERA) Track

11:37 15m Talk | A Bot Identification Model and Tool Based on GitHub Activity Sequences | Journal First Track | Natarajan Chidambaram University of Mons, Alexandre Decan University of Mons; F.R.S.-FNRS, Tom Mens University of Mons

11:52 15m Talk | Does the Tool Matter? Exploring Some Causes of Threats to Validity in Mining Software Repositories | Reproducibility Studies and Negative Results (RENE) Track | Nicole Hoess Technical University of Applied Sciences Regensburg, Carlos Paradis No Affiliation, Rick Kazman University of Hawai‘i at Mānoa, Wolfgang Mauerer Technical University of Applied Sciences Regensburg

14:00 - 15:00 | IMC2 - Cyber Security Panel | Research Papers at Amphithéâtre Bernard Lamarre (C-631) | Chair(s): Mohammad Hamdaqa Polytechnique Montréal

15:30 - 17:00 | Software Security | Early Research Achievement (ERA) Track / Research Papers at L-1710 | Chair(s): Sabbir M. Saleh University of Western Ontario

15:30 15m Talk | Characterizing Logs in Vulnerability Reports: In-Depth Analysis and Security Implications | Research Papers | Yao Shu Wuhan University, Lianyu Zheng Wuhan University, Jinfu Chen Wuhan University, Jifeng Xuan Wuhan University

15:45 15m Talk | Conan: Uncover Consensus Issues in Distributed Databases Using Fuzzing-driven Fault Injection | Research Papers | Haojia Huang Sun Yat-sen University, Pengfei Chen Sun Yat-sen University, Guangba Yu Sun Yat-sen University, Haiyu Huang Sun Yat-sen University, Jia Chang Huawei, Jun Li Huawei, Jian Han Huawei

16:00 15m Talk | Dissecting APKs from Google Play: Trends, Insights and Security Implications | Research Papers | Pedro Jesús Ruiz Jiménez University of Luxembourg, Jordan Samhi University of Luxembourg, Luxembourg, Tegawendé F. Bissyandé University of Luxembourg, Jacques Klein University of Luxembourg

16:15 15m Talk | WakeMint: Detecting Sleepminting Vulnerabilities in NFT Smart Contracts | Research Papers | Lei Xiao Sun Yat-sen University, Shuo Yang Sun Yat-sen University, Wen Chen Energy Development Research Institute, China Southern Power Grid Company Limited, Zibin Zheng Sun Yat-sen University

16:30 7m Talk | On Categorizing Open Source Software Security Vulnerability Reporting Mechanisms on GitHub | Early Research Achievement (ERA) Track | Sushawapak Kancharoendee, Thanat Phichitphanphong, Chanikarn Jongyingyos Mahidol University, Brittany Reid Nara Institute of Science and Technology, Raula Gaikovina Kula Osaka University, Morakot Choetkiertikul Mahidol University, Thailand, Chaiyong Rakhitwetsagul Mahidol University, Thailand, Thanwadee Sunetnanta Mahidol University

Accepted Papers

| Title | Links |
|---|---|
| Does the Tool Matter? Exploring Some Causes of Threats to Validity in Mining Software Repositories | |
| Exploring the Relationship between Technical Debt and Lead Time: An Industrial Case Study | |
| Hidden Figures in Software Engineering: A Replication Study Exploring Undergraduate Software Students’ Awareness of Distinguished Scientists from Underrepresented Groups | |
| Revisiting Method-Level Change Prediction: Comparative Evaluation at Different Granularities | DOI, Pre-print |
| Revisiting the Non-Determinism of Code Generation by the GPT-3.5 Large Language Model | |

Call For Papers

The 32nd edition of the International Conference on Software Analysis, Evolution, and Reengineering (SANER 2025) encourages researchers to (1) reproduce results from previous papers and (2) publish studies with important and relevant negative or null results (results that fail to show an effect yet reveal research paths that did not pay off). We also encourage the publication of negative results or reproducible aspects of previously published work (in the spirit of journal-first submissions); this includes papers accepted to the SANER 2025 main track.

Reproducibility studies. Papers in this category must go beyond simply re-implementing an algorithm and/or re-running the artifacts provided by the original paper. At a minimum, such submissions should apply the approach to new data sets (open-source or proprietary). In particular, we encourage reproducibility studies of techniques that were previously evaluated only on proprietary systems, or only on open-source systems. A reproducibility study should report both the results the authors could reproduce and the aspects of the original work they could not reproduce. We encourage reproducibility studies to follow the ACM guidelines on reproducibility (different team, different experimental setup): “The measurement can be obtained with stated precision by a different team, a different measuring system, in a different location on multiple trials. For computational experiments, this means that an independent group can obtain the same result using artifacts, which they develop completely independently.”

Negative results papers. We seek papers that report negative results from any type of empirical software engineering research (qualitative, quantitative, case study, experiment, among others). For example, did your controlled experiment on the value of dual monitors in pair programming fail to show an improvement over a single monitor? Negative results are still valuable when they are not obvious or when they disprove widely accepted wisdom. As Walter Tichy writes, “Negative results, if trustworthy, are extremely important for narrowing down the search space. They eliminate useless hypotheses and thus reorient and speed up the search for better approaches.”

Evaluation Criteria

Both Reproducibility Studies and Negative Results submissions will be evaluated according to the following standards:

- Depth and breadth of the empirical studies
- Clarity of writing
- Appropriateness of conclusions
- Amount of useful, actionable insights
- Availability of instruments and complementary artifacts
- Methodological rigor. For example, a negative result due primarily to misaligned expectations or to a lack of statistical power (small samples) is not a good submission.

The negative result should stem from a lack of effect, not from a lack of methodological rigor. Most importantly, we expect reproducibility studies to clearly identify the instruments and artifacts the study builds on and to provide links to all artifacts in the submission (the only exception is papers that reproduce results on proprietary datasets that cannot be publicly released).

Submission Instructions

Submissions must be original, meaning that the findings and writing have not been previously published and are not under consideration elsewhere. However, since these are reproducibility studies or negative results, some overlap with previous work is expected; please make that overlap clear in the paper. The publication format should follow the SANER guidelines. Choose “RENE: Replication” or “RENE: NegativeResult” as the submission type.

Submissions will be reviewed following a double-anonymous reviewing process (author names and affiliations must be omitted). Please see the Double-Anonymous instructions on the SANER 2025 Research Papers track page. The EasyChair link for all SANER 2025 tracks is https://easychair.org/conferences/?conf=saner2025.

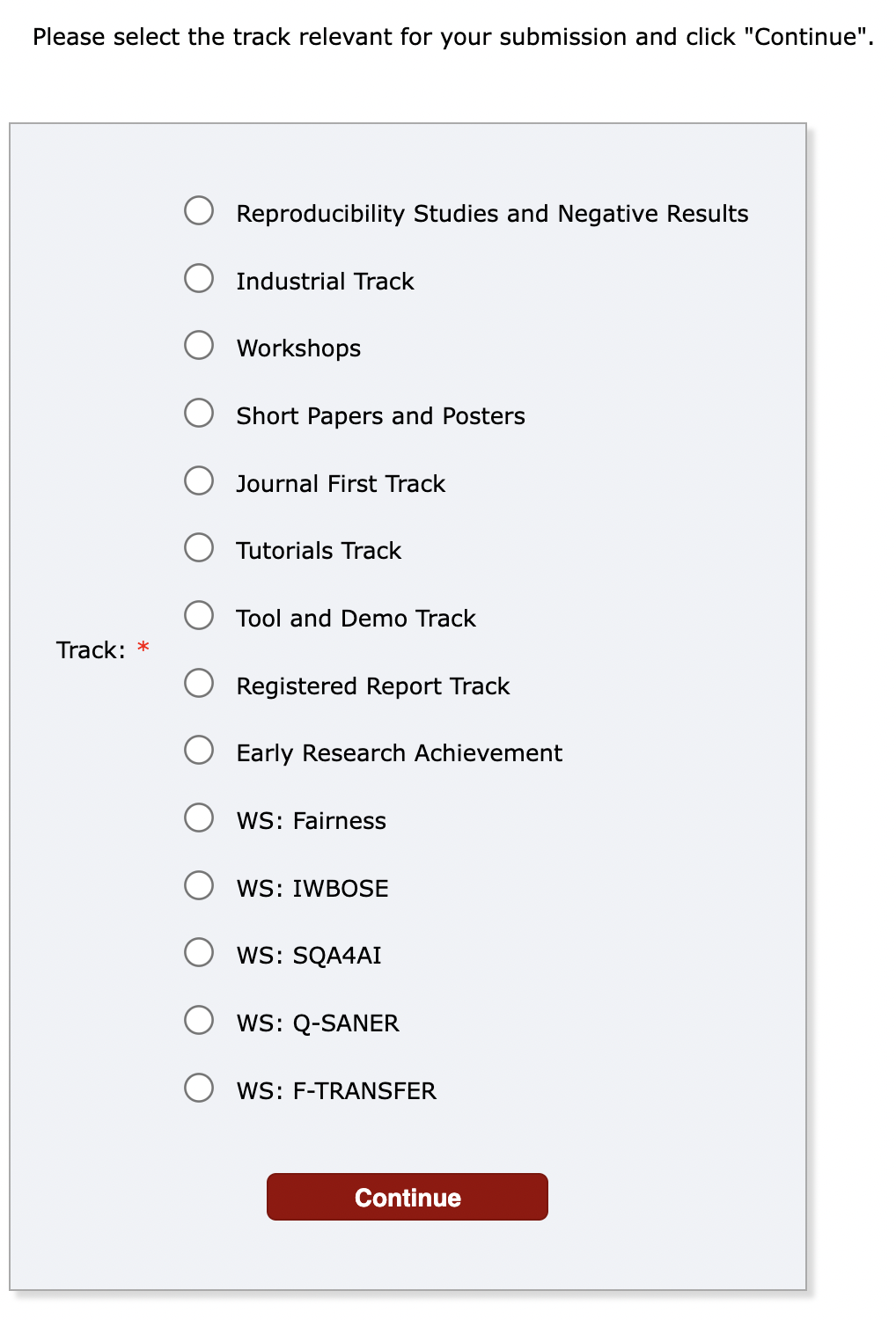

To submit your paper, use the same EasyChair link. After clicking “Make a New Submission,” you will be presented with a list of all available tracks. Be sure to select the correct track (for this call, the RENE track), as illustrated in the screenshot.

Submission Formats

There are two formats:
- Appendices to conference submissions or to previous work by the authors can be described in up to 5 pages (including all text, figures, references, and appendices).
- New reproducibility studies and new descriptions of negative results must not exceed 10 pages (including figures and appendices), plus up to 2 additional pages that contain ONLY references.

Important Dates

- Abstract submission deadline: November 7, 2024, AoE
- Paper submission deadline: November 12, 2024, AoE
- Notifications: December 20, 2024, AoE
- Camera Ready: January 24, 2025, AoE